Gaming has captured a generation of youth, and with them many adults, who for years have been donning headsets in front of their computers, immersing themselves in and interacting with virtual worlds such as Minecraft or the newer Half-Life: Alyx. Advances in technology have augmented this immersive experience by placing users in a real-world setting overlaid by a virtual experience, allowing them to, for example, go on a Pokémon hunt (Pokémon GO) or enter the world of dinosaurs (Jurassic World Live). The immersive experience becomes even more fully blended when virtual and augmented realities are mixed to create a fully interactive environment, as users experience in the updated Demeo.

May 2024

Virtual (VR), augmented (AR), and mixed realities (MR) all fall under the umbrella of extended reality (XR) and are characterized by extending a user’s experience into different worlds via technology that simulates those realms. Although examples of technologies employing XR go back many decades, the term “virtual reality” was coined only in 1987 and “augmented reality” in 1990; it would be another couple of decades before advances in XR technologies made these “realities” more widely available and attractive to industries beyond gaming. As described in a 2021 Forbes article, by 2020, XR had been adopted by industries as diverse as education, healthcare, and manufacturing and construction (Marr B. The fascinating history and evolution of extended reality (XR). Forbes. May 17, 2021. Available at forbes.com).

One of the main current uses of XR in healthcare is in surgery. A recent scoping review of hundreds of studies of the use of XR in surgery found the most evidence on VR for surgical training and preoperative preparation and on AR as a supplement to intraoperative guidance and imaging (Int Orthop. 2023;47:611-621). The authors note that technological advances in hardware and software are fueling the growth of XR-assisted surgery, making this technology more affordable, usable, well validated, and acceptable.

A 2023 systematic review of immersive technologies in medical education found that most studies reported on the use of VR and AR for surgical training and anatomical education, and that these immersive technologies were as effective as traditional teaching methods at generating positive learning outcomes and could be a more cost-effective way of teaching students (Med Sci Educ. 2023;33:275-286). Similar findings were reported in another systematic review showing that these immersive technologies for surgical training resulted in a substantial increase in surgical procedure ability scores and a decrease in surgery duration (Educ Res Rev. 2022;35:100429). The review highlighted the challenges to implementing immersive technologies in medical education, including the cost and technological assistance required, as well as the need for design improvements to minimize any detrimental effects these technologies might have on users, which can include dizziness.

Otolaryngology

Maya G. Sardesai, MD, MEd, associate professor and associate residency program director in the department of otolaryngology–head and neck surgery at the University of Washington School of Medicine in Seattle, said the use of XR in surgical training helps otolaryngologists learn difficult surgical tasks in a safe way without placing patients at risk and without the need for cadavers or animals. At her institution, she and colleagues incorporate VR into an annual boot camp for new residents to help them learn skull base anatomy. “The skull base can be complicated, with small spaces, and traditional approaches with computed tomography scans and scopes are limited because they offer only a two-dimensional view,” she said. With VR, “you get a better understanding and appreciation of this anatomy through a three-dimensional view that allows you to look inside the different spaces and see the nerves, blood vessels, and other tissues that lie within them,” she added.

Along with the benefit of training students on complicated otolaryngologic anatomy, she said VR and AR are also useful for training students on uncommon anatomy, such as craniofacial or congenital anomalies that require specific surgical planning. She noted that interest in AR is growing because VR can cause motion sickness, which can affect up to 30% of users.

Eric Gantwerker, MD, MSc, MS, a pediatric otolaryngologist at Northwell Health in New Hyde Park, N.Y., sees many benefits to using XR in otolaryngologic training, among them the ability for trainees to learn at their own pace on their own time and the opportunity to allow for remote training in real time.

He is currently investigating the use of XR for telecollaboration, in which clinicians across long distances can collaborate via a real-time video and audio feed directly to the point of care. He listed several areas in which otolaryngologists are using XR for otolaryngologic surgical training, including anatomic virtual dissections, patient-specific surgical preparation, operative live surgical guidance, and procedural training with sinus task trainers and temporal bone drilling.

Dr. Gantwerker cautioned, however, that although the technology has tremendous potential, it still comes with many challenges. Among them are cost, technical problems with hardware and software, and inconsistent quality ranging from clunky and unrealistic software to poor educational implementation (using the technology for technology’s sake without realizing the value added with XR). “We should think critically about the advantages of XR and where it is worth the cost for implementation,” he said. “We should build to scale instead of having a ton of one-off pilots and proof of concepts that never go anywhere.”

Dr. Gantwerker noted that research is still sparse on the effectiveness of XR in otolaryngology and otolaryngologic training, on its effect on patient-level outcomes, and on its return on investment. In a 2022 scoping review of the current state of XR use in otolaryngology, of which he is senior author, he and his colleagues highlighted the need for more studies that, for example, assess high-level learning outcomes with XR and deliberately test AR and MR applications in otolaryngology (the review found that all studies to date were limited to VR) (Laryngoscope. 2023;133:227-234). The authors also encouraged the development of a shared definition for XR technology.

David W. Chou, MD, assistant professor of facial plastic and reconstructive surgery in the department of otolaryngology–head and neck surgery at Atlanta’s Emory University, also pointed to accessibility and cost as the biggest hurdles to implementing XR in otolaryngologic practice, and underscored the need for a “critical eye” on how to use these technologies in a way that actually impacts outcomes and to recognize that “they are simply tools to aid us but never to solely rely on.”

He emphasized the potential benefits, as described in a 2023 scoping review he and his colleagues published on the use of XR in facial plastic surgery (Laryngoscope. Published online November 10, 2023. doi: 10.1002/lary.31178). The review included 31 feasibility studies and 21 studies in which XR was being used intraoperatively on actual patients. Evidence from these latter studies showed that most of the intraoperative use was with AR to project, for example, planned osteotomy lines, vascular anatomy, and ideal contour onto the surgical field in patients ranging from those requiring reconstructive surgery due to trauma to those needing free tissue transfer. “This could allow surgeons to know where the critical anatomy lies in relation to their surgical instrument or overlay reference images while a surgeon contours bone of the face, for example,” said Dr. Chou. Another potential benefit, he said, is using VR headsets on patients to distract them during surgery, which in essence uses XR as a form of analgesia.

Another potential emerging use is adopting AR for surgical navigation during facial plastic surgery and free flap harvest. “Our review found a lot of really exciting and creative applications of XR in our field,” Dr. Chou said. “I would like my peers to embrace new technologies like XR, as it is definitely exciting and has broad applications which are yet to be completely explored.”

New Frontier of Medicine

Mark Zhang, DO, MMSc, refers to XR in healthcare as the new frontier in medicine. He is the founder and president of the American Medical Extended Reality Association (AMXRA), an association formed in 2022 in recognition of the widening use and potential of XR in medicine. In a 2023 webinar sponsored by the AMA on the applications of XR in healthcare, he spoke on the creation of a new specialty, medical extended reality (MXR), and on the foundation and mission of AMXRA, its growing interdisciplinary membership, and initiatives that include professional development, education, and research in XR (Extended reality could be the next frontier in health care. August 17, 2023. Available at ama-assn.org).

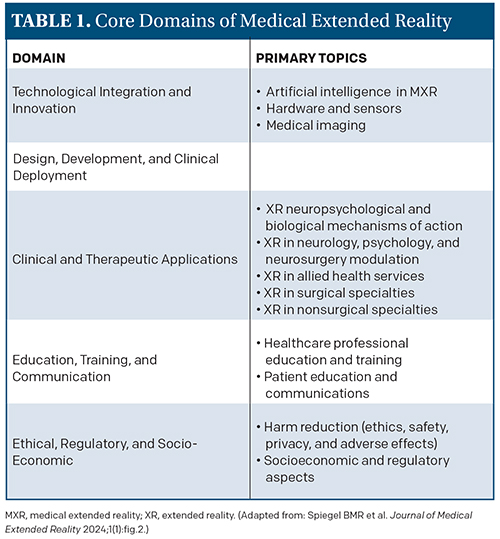

As part of its educational mission, AMXRA is working with regulatory groups such as the U.S. Food and Drug Administration to create standards and guidelines for MXR. Toward this end, the association recently published a study laying out a standard framework to categorize the diverse research and applications of XR in healthcare (J Med Ext Real. 2024;1:4-12). Published in the first issue of the Journal of Medical Extended Reality (JMXR), the official journal of AMXRA, the framework lays out five core domains covering 13 primary topics and 180 secondary topics (see Table 1).

Brennan Spiegel, MD, MSHS, lead author of the study, editor-in-chief of JMXR, and professor of medicine and director of Health Services Research at Cedars-Sinai in Los Angeles, cited the need for such a taxonomy given the lack of a universal language with which to classify and communicate the diverse research and breakthroughs using MXR that have been published in more than 20,000 studies to date. “Our study addresses this crucial gap by introducing a detailed framework that categorizes the vast array of research and applications within MXR,” he said in a press release accompanying the launch of the new journal (Defining the future language of medicine. Cedars Sinai. Published February 13, 2024. Available at cedars-sinai.org/newsroom).

Dr. Spiegel called the taxonomy a “living document,” emphasizing that it will continue to change with new developments and insights.

Artificial Intelligence and XR

Artificial intelligence (AI) can play a role in XR, but the two are not truly integrated in the sense of one being dependent on the other, said Dr. Gantwerker. “There are applications of AI that come into the XR world, such as, during surgery, using XR software where AI is using computer vision to detect and overlay imaging data onto the patient in real time,” he said.

Currently in otolaryngology, he said, the use of AI in XR is still in its infancy, primarily existing behind the scenes of some of the technologies, and isn’t accessible or relevant yet for most otolaryngologists.

But, as reflected in the taxonomy above, how AI is integrated with XR is an area under investigation and one that, according to a 2021 systematic review, may provide additional benefits for the effectiveness of both technologies (Front Virtual Real. Published September 7, 2021. doi: 10.3389/frvir.2021.721933). The review found medical training to be one of the main applications for the combination of AI–XR, and a key benefit of this combination would be in developing metrics to assess user skill and performance. For example, AI could be fed data generated from an XR tool or user to help develop metrics to determine the most relevant skill assessment features. Another potential benefit of the combined technology is using automated AI-based imaging to provide visualization of target parts of structures to increase the efficiency of XR.

Overall, the review described two main objectives for the combination of AI–XR and underscored the future prospects of their interrelationship in a number of industries, including medicine. (See “Two Main Objectives Combining AI-XR.”)

Going forward, the integration of AI with XR is yet another area in which AI is likely to be applied in medicine. As with all potential applications of AI, its benefits will need to be continually weighed against its limitations and risks. Efforts to create standards and guidelines for the adoption of XR in medicine will help with the integration of AI where appropriate.

Mary Beth Nierengarten is a freelance medical writer based in Minnesota.

Two Main Objectives Combining AI-XR

AI serving and assisting XR

• Detect patterns of XR-generated data using machine learning methods.

• Improve XR experience using neural networks and natural language processing methods.

XR serving and assisting AI

XR can generate AI-ready data for training AI systems:

• All training can occur in XR without collecting data from the physical world.

• Learning in XR is fast.

• XR permits AI developers to simulate novel cases for training AI systems.

XR to improve AI performance:

• Validate results and verify hypotheses generated by AI systems.

• Offer advanced visualization of AI structure.