“GPT (Generative Pre-trained Transformer) is a type of natural language processing model developed by OpenAI. It is trained to predict the next word in a sequence of words by processing large amounts of text data. One potential use of GPT in patient health education is to generate personalized educational materials based on a patient’s specific health condition and needs. For example, a chatbot powered by GPT could interact with a patient and ask questions about their health history and current condition, and then use this information to generate educational materials specifically tailored to the patient. This could include information about treatment options, lifestyle changes that may be helpful, or general information about the patient’s condition. By providing personalized and easily accessible health education, a chatbot powered by GPT could potentially help patients better understand their health and make more informed decisions about their care.”

May 2023

The entire paragraph above was generated by ChatGPT after we asked it to “describe the potential role of ChatGPT in patient health education.”

Recently, this new iteration of OpenAI’s GPT has taken the online community by storm, spurring controversy and speculation about the future of education and healthcare, and about whether artificial intelligence (AI) will encroach on existing jobs. Can patients leverage this technology to better understand their own health, and should healthcare providers trust these services as an ancillary tool for patient health education?

Search Specifics

Traditionally, search engines have responded to queries by ranking the available sites and resources according to the number of relevant keywords, citations, visits from other users, and other factors. These computations produce the ranked list that appears as standard search output. Studies have shown that some patient populations experience worsened health anxiety after searching online about their health (Psychosomatics. 2016;57:390–400). Even when patients take care to use validated sites, they have access to the whole breadth of medical knowledge about a specific health condition without necessarily having the training to understand it. These search results frequently lack context and interpretation, which can lead patients to incomplete and, unfortunately, incorrect conclusions about their health.

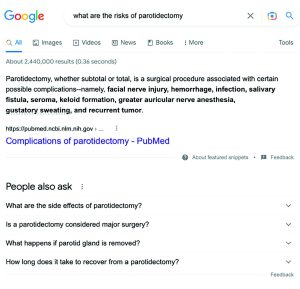

ChatGPT generates a cohesive, easy-to-understand block of text that offers both an overview of the topic and a direct answer to the query. We asked a search engine and ChatGPT about the risks of parotidectomy to see how each platform handled the question. The search engine listed relevant risks in the context of a cited article on the complications of the surgery. It also suggested follow-up questions and answers through a drop-down menu (Figure 1), which can help patients move easily through a series of questions related to their original query.

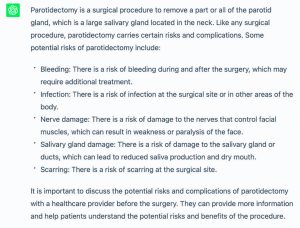

In contrast, ChatGPT produced a single block of text that grouped the relevant side effects by category (e.g., neurologic, infectious, cosmetic, vascular) and emphasized the importance of consulting a licensed healthcare provider (Figure 2).

Benefits and Drawbacks

GPTs represent an inflection point in the evolution of the internet. This latest iteration from OpenAI demonstrates remarkable language generation capabilities and is unique in its ability to synthesize and contextualize information in response to queries. Presenting a single set of paragraphs instead of an unending list of websites in response to a question may be less intimidating to a patient looking to learn about their health.

However, ChatGPT also has notable limitations that prevent it from being a viable resource for patient education. First, even when asked directly, ChatGPT is unable to present the sources from which its answers were obtained. This lack of transparency is a known problem with AI and will ultimately limit ChatGPT’s utility in the healthcare space (Otolaryngol Head Neck Surg. 2019;160:603–611).

Further, the answers ChatGPT generates depend entirely on the questions a user enters. If a patient doesn’t think to ask about a specific feature of a disease, they may never learn about it if they rely on ChatGPT alone. A search engine’s advantages over a GPT are twofold: 1) it offers frequently asked follow-up questions after the initial response, and 2) it provides links to websites and articles containing more information. This enables patients to investigate further when they need more information.

Overall, ChatGPT represents an exciting new frontier in search engines and AI. Patients of otolaryngologists may use ChatGPT to easily understand the features of a procedure or disease that pertains to them. ChatGPT is unique in its ability to summarize and contextualize text in an approachable way that may be less likely to provoke health anxiety. Generative pre-trained transformers like ChatGPT will likely continue to grow in popularity with patients, so it’s important for our colleagues in otolaryngology and across medicine to be aware of their impact on patient care.

Dr. Robert Brody is an assistant professor of otorhinolaryngology–head and neck surgery at the University of Pennsylvania, specializing in head and neck oncology and microvascular reconstructive surgery. Manan Parekh is completing his medical degree at the Temple University Lewis Katz School of Medicine in Philadelphia and is currently a research fellow in the ORL-HNS Department at the University of Pennsylvania.

Figure 1. Screen capture of search results to the question, “What are the risks of parotidectomy?”

Figure 2. Screen capture of a query to ChatGPT asking, “What are the risks of parotidectomy?”